Figuring out study plan with Web Scrapping

The first time I went into the University website to browse through the course, I had difficulties in finding the course I should study first. Although the courses are in categories, the pre-requisites are not explicitly displayed. I have to click through each subject, browse the content and take note of the prerequisite on paper.

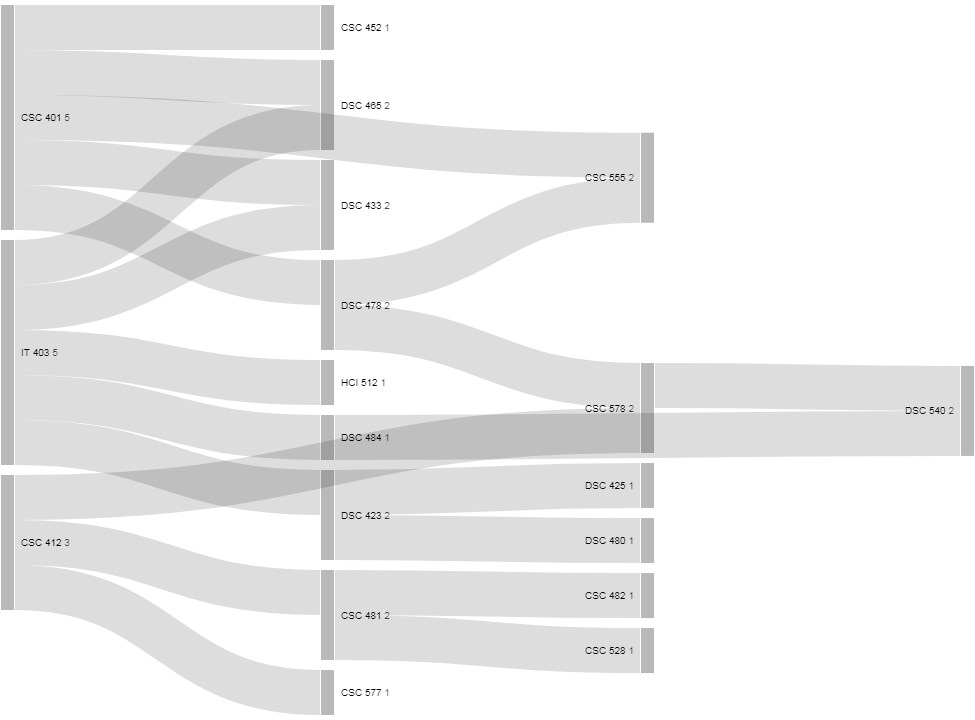

I think having a simple list that displays the course name with the prerequisite would help students to better plan their course so I decided to Scrape this data from the school website and create a visualization that is more user friendly.

To do this, I will use the most common parsing library, BeautifulSoup

import requests

from bs4 import BeautifulSoup

First, we want a list of all majors. I will start all master programs in the School of Computing and Digital Media.

links = []

link='https://www.cdm.depaul.edu/academics/Pages/MastersDegrees.aspx'

r=requests.get(link)

soup = BeautifulSoup(r.text, 'html.parser')

# content = soup.findAll("div", {"class": "Index-Item"})

content = soup.findAll("ul", {"class": "dropdown-menu"})

uls = BeautifulSoup(str(content), 'html.parser')

for a in uls.findAll('a'):

links.append('https://www.cdm.depaul.edu'+a['href'])

# no dropdown > "btn-requirements"

for item in soup.findAll("a", {"class": "btn-requirements"}):

link = (item['href'])

if('/academics/Pages/Current/Requirements' in (str.split(link,'-'))):

links.append('https://www.cdm.depaul.edu'+link)

links

Here is the list of url that has the requirements:

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MA-In-Animation-Motion-Graphics.aspx

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MA-In-Animation-Technical-Artist.aspx

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MA-In-Animation-Traditional-Animation.aspx

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MA-In-Animation-3D-Animation.aspx

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MS-in-Cybersecurity-Computer-Security.aspx

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MS-in-Cybersecurity-Compliance.aspx

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MS-in-Cybersecurity-Networking-and-Infrastructure.aspx

https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MS-In-Data-Science-Computational-Methods.aspx

...

Next we get the list of subjects

links=[]

subjects = []

link = 'https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MS-In-Data-Science-Computational-Methods.aspx'

r=requests.get(link)

soup = BeautifulSoup(r.text, 'html.parser')

tds = soup.findAll("td", {"class": "CDMExtendedCourseInfo"})

for td in tds:

# print(td.text)

subject,courseno=str.split(td.text)

# print('https://www.cdm.depaul.edu/academics/pages/courseinfo.aspx?Subject='+subject+'&CatalogNbr='+courseno)

links.append('https://www.cdm.depaul.edu/academics/pages/courseinfo.aspx?Subject='+subject+'&CatalogNbr='+courseno)

subjects.append(td.text)

subjects

['IT 403', 'CSC 412', 'CSC 401', 'DSC 423', 'CSC 555', 'CSC 521', 'CSC 575', 'CSC 578', 'DSC 425', 'DSC 433', 'CSC 452', 'DSC 465', 'DSC 478', 'CSC 481', 'CSC 482', 'DSC 480', 'CSC 521', 'CSC 528', 'DSC 540', 'CSC 543', 'CSC 555', 'CSC 575', 'CSC 576', 'CSC 577', 'CSC 578', 'CSC 594', 'CSC 598', 'DSC 484', 'GEO 441', 'GEO 442', 'GPH 565', 'HCI 512', 'IPD 451', 'IS 549', 'IS 550', 'IS 574', 'IS 578', 'MGT 559', 'MKT 555', 'MKT 530', 'MKT 534', 'MKT 595']

Now we can query the course catalogue for the prerequisites.

def get_course_req(link):

subjects = []

r=requests.get(link)

soup = BeautifulSoup(r.text, 'html.parser')

print(soup.title.text.strip())

tds = soup.findAll("td", {"class": "CDMExtendedCourseInfo"})

for td in tds:

subject,courseno=str.split(td.text)

links.append('https://www.cdm.depaul.edu/academics/pages/courseinfo.aspx?Subject='+subject+'&CatalogNbr='+courseno)

subjects.append(td.text)

return list(set(subjects))

link='https://www.cdm.depaul.edu/academics/Pages/Current/Requirements-MS-In-Computational-Finance.aspx'

get_course_req(link)

def get_prereq(subjects):

PRE = []

for subject in subjects:

Subject,CatalogNbr=str.split(subject)

link='https://www.cdm.depaul.edu/academics/pages/courseinfo.aspx?Subject='+Subject+'&CatalogNbr='+CatalogNbr

r=requests.get(link)

soup = BeautifulSoup(r.text, 'html.parser')

pageContent = soup.find("div", {"class": "pageContent"})

pageContent = pageContent.find('p')

if(pageContent):

if(pageContent.text.lower().rfind("prerequisite")>0):

prereq = pageContent.text.lower()[pageContent.text.lower().rfind("prerequisite"):]

if('none' not in str.split(prereq)):

prereq = prereq_parser(prereq.upper(),subjects)

PRE.append([subject,prereq])

else:PRE.append([subject,[]])

else:PRE.append([subject,[]])

else:

PRE.append([subject,[]])

return PRE

print(get_prereq(['CSC 540','CSC 471','CSC 242']))

Now we get the prerequisite in a list of list

[['CSC 540', ['CSC 471']], ['CSC 471', []], ['CSC 242', []]]

Final step is to visualize this in a sankey diagram